According to Wikipedia, bots are software applications that run automated tasks (scripts) over the internet. You can either say that there are “good” and “bad” bots, or that there is only the “good” or “bad” intent of the people using them. Either way, the bots we’re talking about here, the malicious ones that cause harm and damage, started evolving in the 1990s, and the development of protective mechanisms soon followed.

You see, bots are good at imitating real human activity (and are getting better at it every day). They are also much faster than humans at carrying out many tasks at once. That is why people realized they could use bots to automate many laborious tasks. What we’re concerned with here is automation aimed at damaging your website infrastructure, finances, personal information, etc., and, more importantly, how to protect against such attempts.

DDoS Attacks – First Uses of Bot Networks for Causing Damage

The initial bot protection was designed to prevent and deal with distributed denial-of-service attacks (more commonly known as DDoS attacks). The main objective of a DDoS attack is to make the targeted website (or service) unavailable to its intended users. It is carried out by a network of internet-connected bots (a botnet) that send a large number of requests to the target’s IP address. If the attack succeeds, the targeted website can’t provide the services it’s supposed to, hence the name: denial of service.

In many countries, these types of attacks are considered illegal.

From a technical standpoint, there are numerous ways to conduct a DDoS attack, and attackers keep getting more imaginative. One distinction worth pointing out is that, besides DDoS attacks, there are DoS attacks, which are mounted from a single host. At the same time, the people who develop defense mechanisms against these attacks are also getting better at it every day.

One of the first bots built to carry out these attacks was the Global Threat bot (GTbot for short), also known as Aristotle’s Trojan. It is an IRC (Internet Relay Chat) bot that, in the vast majority of cases, is installed on users’ computers without their knowledge. Once installed, it turns those computers into “soldiers” that can later be used to launch massive DDoS attacks. GTbot could perform simple DDoS attacks by running custom scripts in response to IRC events.

So, what were some of the most significant defensive measures taken against DDoS attacks?

Single-host DoS and multi-IP DDoS attacks

Let’s start with the simple case. If the attack is mounted from a single host (a simple DoS attack), one way to defend against it is to block the IP address it originates from. While this may work against single-host attacks, it fails against attacks carried out through botnets, which can include over a million infected computers (i.e. individual bots).
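A minimal sketch of IP-based blocking, assuming a sliding-window request limit per address; the class name `IpRateLimiter` and the thresholds are hypothetical, not taken from any particular product. It also illustrates why the approach breaks down against botnets: the same volume of requests spread across many addresses never trips the per-IP limit.

```python
from __future__ import annotations

import time
from collections import defaultdict, deque


class IpRateLimiter:
    """Hypothetical per-IP sliding-window limiter that blocks addresses exceeding a threshold."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.history: dict[str, deque[float]] = defaultdict(deque)
        self.blocked: set[str] = set()

    def allow(self, ip: str, now: float | None = None) -> bool:
        """Return True if a request from `ip` should be served."""
        if ip in self.blocked:
            return False
        now = time.monotonic() if now is None else now
        timestamps = self.history[ip]
        # Forget requests that have slid out of the time window.
        while timestamps and now - timestamps[0] > self.window_seconds:
            timestamps.popleft()
        timestamps.append(now)
        if len(timestamps) > self.max_requests:
            self.blocked.add(ip)  # Block the offending address from now on.
            return False
        return True


limiter = IpRateLimiter(max_requests=100, window_seconds=1.0)

# Single-host DoS: 150 requests within 0.15 s from one address.
single = [limiter.allow("203.0.113.7", now=i * 0.001) for i in range(150)]
print(single.count(False))  # 50: everything after the 100th request is refused

# Botnet-style DDoS: the same 150 requests, one per address.
distributed = [limiter.allow(f"198.51.100.{i}", now=0.5) for i in range(150)]
print(all(distributed))  # True: no single address exceeds the limit
```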

There are multiple ways to conduct attacks with botnets, but the most common one is for a so-called “bot-herder” to route the attack through numerous proxies. This makes it virtually impossible to stop the attack by blocking IP addresses. However, attacking through proxies is expensive and represents a significant investment on the attacker’s side.

A more recent method of conducting DDoS attacks uses bots that send traffic from the IP addresses of residential users, which makes it practically impossible to base the protection on the attacker’s IP address. This type of attack is more difficult to deal with, but it is also much more expensive to conduct.

Ineffectiveness of UserAgent-based protection

Seeing how relying on IP address blocking is ineffective, people have resorted to other means. One of them is UserAgent-based protection. But, first of all, what are UserAgents anyway?

Simply put, the UserAgent is a string that a browser (or any other HTTP client) sends in the User-Agent header of each request to identify itself. Alongside the visitor’s IP address, it is commonly used for identification purposes. It is not just a plain label like ‘Firefox’ or ‘Safari’: a regular browser’s UserAgent typically includes the browser name and version, the rendering engine, and information about the operating system, for example ‘Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:120.0) Gecko/20100101 Firefox/120.0’.

Your initial thought may now be: “Well, OK, I can see which UserAgents belong to unwanted traffic, and I can block them.” It’s not that easy. Bots can set the UserAgent to any value they like, including a copy of a real browser’s string, and change it as often as they want. This makes their signature much more difficult to detect.
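To see why, here is a minimal sketch of a naive UserAgent denylist; the token list and the function name `is_blocked_user_agent` are hypothetical examples. The last lines use Python’s standard `urllib.request.Request` to show that a client can send any UserAgent it likes by setting a single header value (no request is actually sent).

```python
from __future__ import annotations

import urllib.request

# Hypothetical denylist: substrings of the default UserAgents of common HTTP tools.
BLOCKED_UA_TOKENS = ("python-requests", "curl", "scrapy", "wget")


def is_blocked_user_agent(user_agent: str | None) -> bool:
    """Naive check: block missing UserAgents and ones containing a denylisted token."""
    if not user_agent:
        return True
    ua = user_agent.lower()
    return any(token in ua for token in BLOCKED_UA_TOKENS)


# A scraper that announces itself honestly is caught...
print(is_blocked_user_agent("python-requests/2.31.0"))  # True

# ...but the same scraper can copy a real browser's UserAgent in one line.
BROWSER_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:120.0) "
              "Gecko/20100101 Firefox/120.0")
spoofed = urllib.request.Request("https://example.com/", headers={"User-Agent": BROWSER_UA})
print(is_blocked_user_agent(spoofed.get_header("User-agent")))  # False
```

The check itself is correct; the problem is that its only input is a value fully controlled by the client.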

Making a model of expected human behavior

Let’s now talk about smarter ways of protecting against bots.

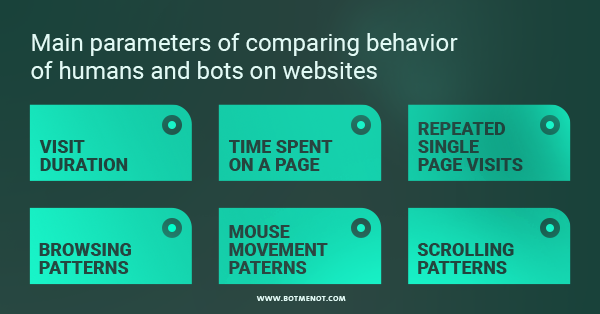

People tasked with defending against bots have come up with an interesting behavioral solution. Namely, they started building models of expected human behavior from data they were certain had been generated by real human visitors. They tracked:

- visit durations,

- how much time a visitor spent on one page,

- whether they visited only a single page multiple times,

- whether they browsed through the website,

- mouse moving patterns,

- scrolling patterns.
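The text does not say which kind of model was used. As an illustration only, one of the simplest options is to compute each tracked signal’s mean and standard deviation over known-human visits, then flag a visit whose z-score on any signal is extreme. The feature names, sample numbers, and the threshold of 3 below are all hypothetical.

```python
from __future__ import annotations

import statistics

# Hypothetical numeric features derived from the tracked signals.
FEATURES = ("visit_duration_s", "seconds_per_page", "distinct_pages", "mouse_moves", "scroll_events")


def make_session(*values: float) -> dict[str, float]:
    return dict(zip(FEATURES, values))


def build_model(human_sessions: list[dict[str, float]]) -> dict[str, tuple[float, float]]:
    """Per-feature (mean, sample standard deviation) over sessions known to be human."""
    return {
        f: (statistics.mean(s[f] for s in human_sessions),
            statistics.stdev(s[f] for s in human_sessions))
        for f in FEATURES
    }


def anomaly_score(model: dict[str, tuple[float, float]], session: dict[str, float]) -> float:
    """Largest absolute z-score: how far this visit strays from typical human behavior."""
    return max(
        abs(session[f] - mean) / max(std, 1e-9)  # guard against a zero spread
        for f, (mean, std) in model.items()
    )


def is_probably_bot(model, session, threshold: float = 3.0) -> bool:
    return anomaly_score(model, session) > threshold


# Hypothetical reference visits known to be human.
humans = [make_session(180, 30, 6, 420, 25), make_session(240, 40, 6, 510, 30),
          make_session(120, 24, 5, 300, 18), make_session(300, 50, 6, 600, 40),
          make_session(150, 30, 5, 380, 22)]
model = build_model(humans)

print(is_probably_bot(model, make_session(200, 35, 6, 450, 28)))  # False: close to the baseline
print(is_probably_bot(model, make_session(4, 0.2, 20, 0, 0)))     # True: fast, no mouse, no scrolling
```

With only a handful of reference visits these statistics are very rough; a real system would need far more data and would likely use a richer model than independent per-feature z-scores.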

Once they had gathered enough data, they started comparing new visits against these models. If a visit’s behavior differed drastically from what was expected, there was a high probability that it came from a bot. What was the course of action in those cases?

There are multiple ways to approach bot protection. The most frequently used solutions are external services, in-app checks, or Web/App server plugins. In some cases, you may also be limited in the types of defenses you can use. For example, if you sell on Shopify, you can use only the bot protection that Shopify already has in place.
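As an illustration of the in-app option, here is a minimal sketch of a Python WSGI middleware that sits in front of an application and answers flagged requests with a 403 ‘Access denied’ response. The `is_suspicious` callback and the hard-coded `BLOCKED_IPS` set are hypothetical stand-ins for whatever detection logic you actually use; `wsgiref.util.setup_testing_defaults` comes from Python’s standard library and only fills in a test request environment.

```python
from wsgiref.util import setup_testing_defaults


class BotBlockingMiddleware:
    """Wraps a WSGI app and refuses requests that `is_suspicious(environ)` flags."""

    def __init__(self, app, is_suspicious):
        self.app = app
        self.is_suspicious = is_suspicious

    def __call__(self, environ, start_response):
        if self.is_suspicious(environ):
            body = b"Access denied"
            start_response("403 Forbidden", [("Content-Type", "text/plain"),
                                             ("Content-Length", str(len(body)))])
            return [body]
        return self.app(environ, start_response)


def hello_app(environ, start_response):
    """Stand-in for the real application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello, human!"]


BLOCKED_IPS = {"203.0.113.7"}  # hypothetical output of a detection step
app = BotBlockingMiddleware(hello_app, lambda env: env.get("REMOTE_ADDR") in BLOCKED_IPS)


def simulate_request(ip):
    """Call the wrapped app directly with a minimal test environ."""
    environ = {"REMOTE_ADDR": ip}
    setup_testing_defaults(environ)
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], body


print(simulate_request("203.0.113.7"))  # ('403 Forbidden', b'Access denied')
print(simulate_request("192.0.2.10"))   # ('200 OK', b'Hello, human!')
```

Behind a reverse proxy or CDN, `REMOTE_ADDR` will be the proxy’s address, so the client IP would have to come from a trusted forwarding header instead.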

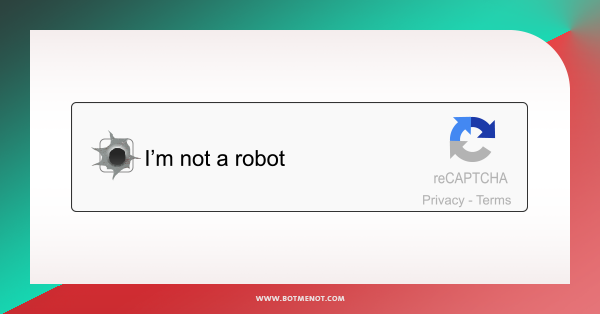

It’s imperative, though, that you don’t block human traffic from your website. How can that happen, you may ask? Through a so-called “false positive”. Let’s stay with the example of online selling, only this time you run your own eCommerce platform. Say you install bot protection software that presents suspicious visitors with a CAPTCHA test. If a real human is visiting your website, they might get discouraged after a few tries and go to another website. Just as important, a visual CAPTCHA can effectively lock visually impaired users out of your website.
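One way to limit false positives is to respond in tiers instead of treating every suspicious visit the same: serve likely humans normally, challenge uncertain visits, and block only near-certain bots. The sketch below assumes you already have a bot probability per visit; the thresholds and the helper `human_friction_rate`, which measures how many known-human visits would be inconvenienced, are hypothetical.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    CHALLENGE = "challenge"  # e.g. present a CAPTCHA
    BLOCK = "block"          # e.g. show an 'Access denied' page


def choose_action(bot_probability, challenge_at=0.5, block_at=0.95):
    """Map a (hypothetical) bot probability in [0, 1] to a response tier."""
    if not 0.0 <= bot_probability <= 1.0:
        raise ValueError("bot_probability must be between 0 and 1")
    if bot_probability >= block_at:
        return Action.BLOCK
    if bot_probability >= challenge_at:
        return Action.CHALLENGE
    return Action.ALLOW


def human_friction_rate(human_scores, **thresholds):
    """Share of known-human visits that would be challenged or blocked (false positives)."""
    flagged = sum(choose_action(p, **thresholds) is not Action.ALLOW for p in human_scores)
    return flagged / len(human_scores)


# Hypothetical scores the detector assigned to visits known to be human.
human_scores = [0.05, 0.10, 0.20, 0.55, 0.30]
print(human_friction_rate(human_scores))                    # 0.2
print(human_friction_rate(human_scores, challenge_at=0.6))  # 0.0
```

Raising `challenge_at` means fewer humans see a CAPTCHA, at the cost of letting more bots through unchallenged; where to set it depends on your own traffic.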

CAPTCHA and other approaches – still not bulletproof

Keep in mind, too, that some bots can solve CAPTCHAs very efficiently. The most effective approaches employ machine learning, which allows bots to solve standard letter-recognition CAPTCHAs. Furthermore, bots are getting better every day at bypassing image-recognition tests and checkbox tests. Besides a CAPTCHA, another option is to show the suspected bot an ‘Access denied’ page.

There is another thing you can do, and it doesn’t involve blocking the bot traffic at all. If you think someone is using bots to scrape information from your website (for example, the prices on your eCommerce site), you can intentionally show wrong information to visitors you are certain are bots. This is very risky, though, because there’s never a 100% guarantee that a visit comes from a bot, so there’s a chance that you show wrong information to a real potential customer.
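A minimal sketch of this decoy approach, assuming each request already carries a bot-confidence score; `displayed_price`, the 0.99 threshold, and the 5 to 20% markup are hypothetical choices. The very high threshold reflects the risk described above: any real customer whose visit crosses it will see a wrong price.

```python
import random
from decimal import ROUND_HALF_UP, Decimal


def displayed_price(true_price, bot_confidence, rng, decoy_threshold=0.99):
    """Return the real price, or a randomly inflated decoy for near-certain bots."""
    if bot_confidence < decoy_threshold:
        return true_price
    markup = Decimal(str(rng.uniform(1.05, 1.20)))  # hypothetical 5-20% distortion
    return (true_price * markup).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


rng = random.Random(42)
price = Decimal("19.99")
print(displayed_price(price, bot_confidence=0.40, rng=rng))   # 19.99: treated as a customer
print(displayed_price(price, bot_confidence=0.999, rng=rng))  # a decoy between 20.99 and 23.99
```

Randomizing the markup makes the distortion harder for a scraper to detect and undo than a fixed offset would be.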

Conclusion

We have shown how dangerous bots can be and that there’s still no bulletproof protection against them.

What you always want to know is how well protected your website is against bots, and how effective your protection measures are.

This is where BotMeNot comes in. It can run multiple tests on your website, ranging from fairly “mild” ones up to the most sophisticated ones, which use cutting-edge bot technology.

Don’t hesitate when it comes to your website’s security. Run a free test on your website and see how satisfied you are with your protection.

If you think you’re at risk, you can also choose to run more complex tests and decide what to do from there.